In the past, the trade-offs between performance, power, and cost were largely defined by large OEMs within the scope of industry-wide scaling roadmaps. Chipmakers designed chips to meet the narrow specifications set by those OEMs. But as Moore's Law slows, and as more sensors and electronics generate more data everywhere, design goals and the means of achieving them are changing.

Some of the largest systems companies are already designing chips in-house to focus on specific data types and use cases. At the same time, traditional chipmakers are creating flexible architectures that can be reused and easily modified for a wider range of applications.

In this new design world, the speed at which data needs to be processed and the required accuracy of the results can vary greatly. Architects can weigh raw performance, performance per watt, and total cost of ownership, including reliability and safety. The weighting depends on the situation: for example, whether a chip will be used in a safety- or mission-critical application, or whether it sits near other components that could generate heat or noise. This in turn determines the type of packaging, memory, and layout, and how much redundancy is required.

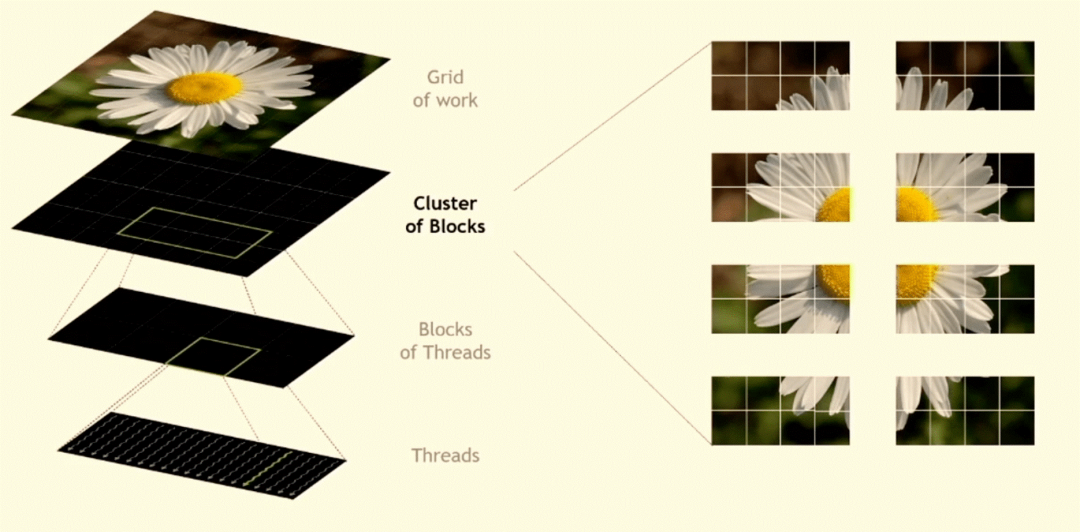

At the recent Hot Chips 34 conference, NVIDIA senior principal engineer Jack Choquette previewed the company's new 80-billion-transistor GPU. The new architecture takes into account two kinds of locality. Spatial locality allows data from different locations to be processed by whichever processing elements are available. Temporal locality allows multiple cores to operate on the same data.

The goal is to allow more blocks to operate on pieces of data synchronously or asynchronously, for greater efficiency and speed. This contrasts with existing approaches, where all threads have to wait for other data to arrive before processing begins.

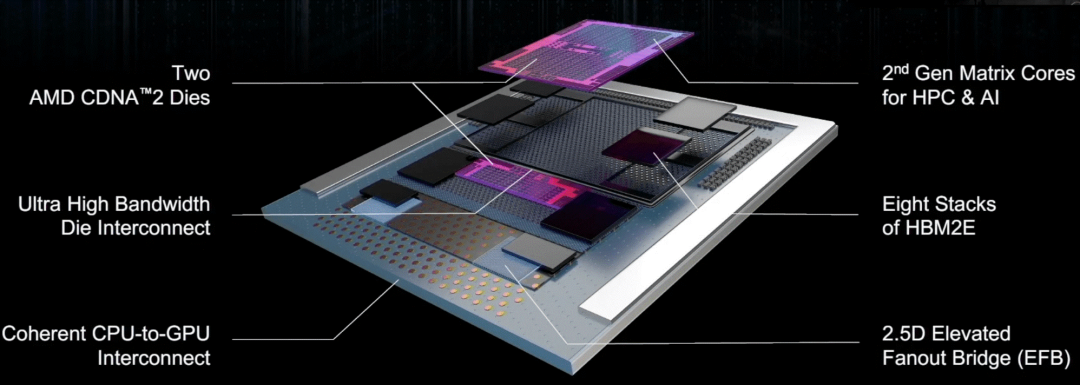

In AMD's design, the data path is widened for data forwarding and reuse. As with NVIDIA's architecture, the goal is to remove bottlenecks in the data path, simplify operations, and improve utilization of the various compute elements. To improve performance, AMD eliminates the need to constantly copy data back to memory, significantly reducing data movement.

AMD's new Instinct chips include flexible high-speed I/O and a 2.5D Elevated Fanout Bridge connecting the various compute elements. High-speed bridges were first commercialized by Intel with its Embedded Multi-Die Interconnect Bridge (EMIB), which enables two or more chips to act as one. Apple used a similar approach, bridging two Arm-based M1 Max SoCs to create its M1 Ultra chip.

All of these architectures are more flexible than previous versions, and the chiplet/tile approach offers large chipmakers a way to customize chips while still serving a broad customer base.

Tesla's data center chip architecture is a good example. The company wants to take advantage of multiple levels of parallelism. These include data- and model-level parallelism at the training level, as well as the inherent parallelism in operations such as the convolutions and matrix multiplications performed during training. The goal is fully programmable and flexible hardware.
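The difference between data- and model-level parallelism can be made concrete with a toy example. The minimal, hypothetical Python sketch below is not Tesla's implementation and uses no real training framework. It splits one matrix multiplication, y = x·W, across imaginary "workers" in two ways:

- With data parallelism, each worker holds a full copy of W and processes a slice of the batch.
- With model parallelism, each worker holds a slice of W's columns and processes the whole batch.

The function names, matrix values, and worker counts are illustrative assumptions.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiply: (n x k) @ (k x m) -> (n x m)."""
    k = len(b)
    m = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(m)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def split(items: list, parts: int) -> list:
    """Split a list into at most `parts` contiguous, nearly equal chunks."""
    size = -(-len(items) // parts)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def data_parallel(x: Matrix, w: Matrix, workers: int) -> Matrix:
    # Each hypothetical worker gets a slice of the batch (rows of x)
    # plus a full copy of the weights w.
    partials = [matmul(batch_shard, w) for batch_shard in split(x, workers)]
    # Reassemble the outputs along the batch (row) dimension.
    return [row for part in partials for row in part]


def model_parallel(x: Matrix, w: Matrix, workers: int) -> Matrix:
    # Each hypothetical worker holds only some columns (output features) of w
    # and sees the full batch x.
    weight_shards = [transpose(cols) for cols in split(transpose(w), workers)]
    partials = [matmul(x, shard) for shard in weight_shards]
    # Reassemble the outputs along the feature (column) dimension.
    return [sum((part[r] for part in partials), []) for r in range(len(x))]


if __name__ == "__main__":
    x = [[1, 2], [3, 4], [5, 6], [7, 8]]  # batch of 4 inputs, 2 features each
    w = [[1, 0, 2], [0, 1, 3]]            # 2 input features -> 3 outputs
    reference = matmul(x, w)
    print(reference)                             # [[1, 2, 8], [3, 4, 18], [5, 6, 28], [7, 8, 38]]
    print(data_parallel(x, w, 2) == reference)   # True
    print(model_parallel(x, w, 2) == reference)  # True
```

Both strategies reproduce the single-device result. What differs is what each worker must hold: a full copy of the weights in the data-parallel case, or only a slice of them in the model-parallel case.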

The Difference

ASICs have always been custom. But with each new process node, costs have risen to the point that only the highest-volume applications, such as smartphones and PCs, can recoup design and manufacturing costs.

To squeeze higher performance per watt from these designs, chipmakers are also optimizing chips for specific software features and for how the software utilizes the hardware. This is a complex and often iterative process that requires constant fine-tuning through regular software updates.

To save power and cost, design teams prioritize different functions based on the application. They then either package multiple chips together or integrate everything into a single SoC, depending on their specific design goals.

As more chipmakers adopt a chiplet approach, they need to consider a mix of critical and non-critical data paths. This involves everything from noise to die shift in packages. It also includes mismatched coefficients of thermal expansion among the different materials in those packages, and process variation in the components themselves. Companies like Arm, Synopsys (ARC processors), and a growing number of RISC-V vendors are doing thorough work on their IP. Even so, the number of edge cases and potential interactions is increasing.

All of this makes design, verification, and debugging more difficult. It can also create problems in manufacturing if there is too little data about, and understanding of, where anomalies are likely to arise. This explains why more EDA, IP, test/analytics, and security companies are offering services to complement the work of in-house design teams.

"It's no longer necessary to design a CPU to perform the x, y, and z functions for every workload without considering the overhead," said Sailesh Chittipeddi, executive vice president of Renesas Electronics. "That's why all these companies are now more vertical. They're driving the solutions they need. This includes artificial intelligence at the system level. It includes the interaction between electrical and mechanical properties, right down to where you place a specific connector. It's also driving more CAD companies to move toward system-level support and system-level design."

This shift is taking place in a growing number of vertical markets, from mobile phones and automobiles to industrial applications. It is also driving a wave of smaller acquisitions well below the radar, as chipmakers look to target their hardware at a wide range of new markets.

However, domain-specific solutions have increased pressure on EDA companies to identify commonalities that can be automated. That was much easier with planar chips developed at a single process node. But as more and more markets go digital, including automotive, industrial, military/aerospace, commercial, and consumer, their goals are diverging.

These differences are expected to grow as chiplets developed at different process nodes are combined in custom packages. Those packages may be based on anything from fan-outs with pillars to full 3D-IC implementations. In some cases there may even be a combination of 2.5D and 3D-IC, which Siemens EDA has labeled 5.5D.

The good news for EDA and IP companies is that this has significantly increased the need for emulation, simulation, prototyping, and modeling. Large systems companies also have been pressuring EDA vendors to automate more of the design process for them. However, there aren't enough of these companies to justify such an investment. Instead, systems companies have turned to EDA and IP companies for expert services, shifting from transactional relationships to deeper partnerships. That gives EDA companies a better understanding of how and where their tools are being used, and of where gaps represent new opportunities.

"A lot of the new players are more vertically integrated, so they're doing more in-house," said Niels Faché, vice president and general manager of Design and Simulation at Keysight. "There's a lot more interest in system-level simulation, and the need for collaborative workflows within and across companies is growing. We're also seeing more design iterations. So you have a development team, a quality team, and you're constantly updating the design."

For chip companies designing chips for OEMs, that's only part of the challenge. "If you look at the automotive market, it's not about designing a chipset anymore," Faché said. "In the initial stages, a chip company might use the software to build a reference design and set it up based on how it's used. Then, the OEM will look to optimize. In doing so, it pushes the collaboration up the traditional food chain. For example, if you're developing a radar chip, then it's not just a radar subsystem. It's a radar in the context of a larger technology stack."

The stack may include RF packages, antennas, and receivers, while the OEM uses EDA tools to build the radio.

Application-specific and general-purpose

A big challenge for design teams is that more of the design work is shifting to the front end. In the past, teams created the chip architecture and then addressed the details during the design process. Now more issues need to be addressed at the architectural level.

"There was a time when a chip company shipped a chip that consumed too much power, and the OEM wasn't happy with that," said Joe Sawicki, executive vice president of Siemens Digital Industries Software. "But you wouldn't know until you ran the applications. AI makes this problem bigger, because it's not just a software problem. Now, you can run all this inference on it. If you don't care about latency, you can put a general-purpose chip in the cloud, and you just talk to the cloud and get the data back. But if you have something real-time that needs to respond immediately, you can't afford that latency, and you want low power. So, at least for accelerators, you want custom designs."

Gordon Cooper, product marketing manager at Synopsys, agrees. "If you're using AI, are you using it 100% of the time, or is it just nice to have? If I just want to say I have AI on my chip, maybe I can just use a DSP for AI," he said. "There's a trade-off, and it depends on the context. If you want full-fledged AI 100% of the time, maybe you need to add external or additional IP."

A big challenge for AI is keeping devices up-to-date as algorithms are constantly updated. This becomes more difficult if the design is a one-off and everything is optimized for one or more algorithms. So while architectures need to be scalable in terms of performance, they also need to be scalable over time and in the context of other components in the system.

Software updates also can wreak havoc with clocking. "Anything you do to the synchronization quality of a chip affects latency, performance, power consumption, and time-to-market," Movellus CEO Mo Faisal said in a presentation at the 2022 AI Hardware Summit. "With reticle-sized chips, you can optimize the core and make sure it plays nicely with the software. It's matrix multiplication and graph computation, so the more cores you throw at it in parallel, the better. However, these chips are now facing challenges. It used to be a problem for one or two teams at Intel and AMD. Now it's everyone's problem."

Keeping everything in sync is becoming a process, not a single function. "You may have different workloads," Faisal said. "So you might want to use just 50 cores for one workload, and for the next workload you want to use 500 cores. But when you turn on the next 500 cores, you end up stressing the power grid, and that leads to voltage droop."
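Faisal's warning about stressing the power grid when hundreds of cores switch on at once can be put into rough numbers. The first-order relation is V = L·di/dt: the faster the supply current changes, the larger the inductive voltage droop. The Python sketch below is a back-of-envelope illustration, not Movellus's method. The per-core current (0.1 A), power-delivery inductance (10 pH), and 10 ns wake-up ramp are made-up values. The model also ignores resistive (IR) drop and on-die decoupling capacitance.

```python
def inductive_droop_mv(cores: int, amps_per_core: float, wake_steps: int,
                       step_ns: float, inductance_ph: float) -> float:
    """First-order droop estimate, V = L * di/dt, returned in millivolts.

    Hypothetical model: `cores` are woken in `wake_steps` equal batches, each
    batch's current ramping linearly over `step_ns`, with the next batch
    starting only after the previous ramp finishes. IR drop and decoupling
    capacitance are ignored, so this is only a rough illustration.
    """
    step_current_a = cores * amps_per_core / wake_steps  # current added per batch
    di_dt = step_current_a / (step_ns * 1e-9)             # amperes per second
    return inductance_ph * 1e-12 * di_dt * 1e3            # volts -> millivolts


if __name__ == "__main__":
    # Illustrative (made-up) numbers: 500 cores at 0.1 A each, 10 pH of
    # power-delivery inductance, and a 10 ns current ramp per wake-up step.
    all_at_once = inductive_droop_mv(500, 0.1, wake_steps=1, step_ns=10, inductance_ph=10)
    staggered = inductive_droop_mv(500, 0.1, wake_steps=10, step_ns=10, inductance_ph=10)
    print(round(all_at_once, 1))  # 50.0
    print(round(staggered, 1))    # 5.0
```

In this toy model, spreading the same 50 A wake-up over ten steps cuts di/dt, and therefore the estimated droop, by a factor of ten. That is why staggered core wake-up is a common mitigation, at the cost of a slower transition between workloads.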

There is also a problem with switching noise. In the past, some of these problems could be solved with extra margin. But at advanced nodes, margin increases the time and energy required to move electrons through very thin wires, which have higher resistance and dissipate more heat. As a result, the trade-offs at each new node become more complex, and the interactions between the different components in a package are additive.

"If you look at 5G, it means something different for the car than it does for the data center or the consumer," Frank Schirrmeister, director of product marketing at Cadence, said in an interview. "They all have different latency and throughput requirements. The same goes for AI/ML. It depends on the domain. Then, because everything is hyperconnected, it's not just within one domain. So it essentially requires many variants of the same chip, and this is where heterogeneous integration gets interesting. Disaggregation of the SoC comes in handy because you can offer different levels of performance based on things like binning. But it's not a design by itself anymore, because some of the rules no longer apply."

In Conclusion

The entire chip design ecosystem is constantly changing, and that change extends all the way to software. In the past, design teams could count on software written at a high level of abstraction to perform well, with regular improvements arriving with each new node.

Chipmakers are now planning architectures that are either highly specific or generic enough to be leveraged across multiple designs.